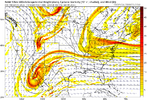

> 12z GraphCast also goes boom boom. I'm speechless... here's it and the 00z run for comparison. The 00z run completely left the cutoff behind; the 12z pulled a good portion of it into the banging northern trough...
>
> That's the difference with all these AI models... they have the final northern wave dropping down HARD, and that's the key to a banger.

Man… I hope your hopes aren't actually sky high on these kinds of models, 'cause as much as you've been talking them up, your soul might end up crushed. I'm in your boat in SC, so I'm pulling for them. But man…


Wintry 1/9-12 Winter Potential Great Dane or Yorkie

- Thread starter SD

- Start date

It's intriguing to see the EURO AI and the traditional EURO models offering nearly contradictory solutions for this storm. These two models will undoubtedly be the ones to watch closely, as it’s only a matter of time before one adjusts to align with the evolving pattern.

Blue_Ridge_Escarpment

Member

I could be looking at them totally wrong, but with the phase they look too warm in central SC.

billyweather

Member

Where can you find these maps?

> At this rate, that northern-central s/w feature that just showed up will need to zip east, drive a cold front through the SE, and have the Baja low trail back and ride along that front.

Isn't what you just described sort of a faster version of what the CMC has been trying to do all weekend?

Mitch, what is this, the EuroAI?

You're kind of right. If the trough ends up digging into the southwestern U.S. (Baja region), it tends to pull more warm, moist air from the Gulf farther north, which could overwhelm any cold air trying to push south. This setup often results in a warmer storm track overall, with rain dominating where snow or a wintry mix might have been expected. The key will be how far east the trough shifts and whether strong ridging in the Midwest can suppress the warm air enough to keep things colder. Without that balance, the Baja phase risks tipping the scales toward a milder outcome.

This system is very complex...

> Where's the EuroAI snow map? Storm Vista?

Storm Vista has AIFS snow, and it is also available on WxBlender (DM me for beta access), but the 12z run data doesn't drop until around 3:30. The ECMWF site has the plots at 2:00, but the data doesn't drop until later, so the snow maps won't be available until then.

Not seeing any real big-dog EPS members makes me think that a light to moderate event has become the ceiling here, which I think most of us would happily take.

I suppose it's possible that there is some poorly modeled energy somewhere that, when better sampled, will show a higher end outcome.

We trended toward phasing and rain and now toward not phasing and clouds. It seems like in totality, the trends have been unclear.

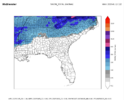

Here's the 6z AIFS snowfall on WxBlender.

Maybe so. I haven’t even been looking at them. Just been feeding off the hyped up energy in here on them.

Thanks


There are temperature issues with a lot of this "bombs/buried snow" talk. Unless you see a snow map from the AIFS that confirms these statements, be very wary of the hype.

That’s the WPC forecast. The big dawgs. Actual humans homie.

> Exactly, it needs to be NE winds to funnel it in.

I would think that with an SLP near the Gulf Coast, the wind here would be out of the NE. However, an east to ESE wind is what I see on all deterministic models ATM.

rburrel2

Member

There are five AI models on the ECMWF product page: Euro AI, GraphCast, FourCastNet, Microsoft, and Pangu... all five of them drop the northern wave down hard, create a very sharp trough, and have a strengthening surface low riding up the coast... this is wild.

All of these are in stark contrast to the Euro and GFS, which just have a putrid, circular-based "trough" (if you can call it that) over the Great Lakes on Saturday morning. Gonna be really interesting to see who folds.


Is this from, like, 1992? Other tools they put out look a bit more modern lol

That's down from the previous run, isn't it?


Yes, but the last run was unbelievable and had some very large ones. Maybe we’ll go back.

rburrel2

Member

Nah, I'm just trying to piece the puzzle together. I had decided the odds of anything but a weakening southern slider were near 0 until I saw the 12z AI runs... now I'm not so sure. I'm back to 50/50 on it.

Yep, we all need some competing factors now. Good luck, mods.

Let’s see what's on deck for me: rain or no rain. I’m definitely wishcasting the no-rain side.

rburrel2

Member

There are always temperature issues somewhere in a storm, but these new AI runs are a good bit farther east with the 850 mb 0°C isotherm compared to the "phased boom" runs of a couple of days ago. I assume that's because the phasing happens later on these runs than what was depicted back then.

Quick note on the AI guidance: all 5 of them listed score better than GFS in testing. They are trained on analysis data from many years, then tested on a set of years (usually 2020 through present). The evaluation is usually done using a metric called RMSE, which is also the metric used in training. RMSE/MSE really hammers a model for drastically wrong forecasts but doesn't penalize slightly wrong forecasts nearly as hard. This essentially leads to most AI models being very accurate synoptically but underestimating mesoscale extrema. The metric essentially punishes models for taking risks, and leaning into extrema is a risk for the model.
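To make the RMSE point concrete, here is a toy Python sketch with made-up numbers (not taken from any of these models): suppose the low either bombs out to 980 hPa (30% chance) or stays a weak 1004 hPa slider (70% chance). A single forecast that minimizes expected squared error, roughly what an MSE training objective rewards, lands on the probability-weighted mean of about 996.8 hPa rather than on the bomb. This is a simplified picture of the incentive only, not how AIFS, GraphCast, or the others are actually trained.

```python
"""Toy illustration (hypothetical numbers): why a squared-error objective
favors a 'hedged' forecast over committing to an extreme outcome."""

# Hypothetical scenario: the low either bombs out (980 hPa) with 30% chance
# or stays a weak slider (1004 hPa) with 70% chance.
OUTCOMES_HPA = [980.0, 1004.0]
PROBS = [0.3, 0.7]


def expected_squared_error(forecast_hpa: float) -> float:
    """Expected squared error of one deterministic forecast over the scenarios."""
    return sum(p * (forecast_hpa - o) ** 2 for o, p in zip(OUTCOMES_HPA, PROBS))


def best_forecast(step: float = 0.1) -> float:
    """Brute-force the forecast value that minimizes expected squared error."""
    lo, hi = min(OUTCOMES_HPA), max(OUTCOMES_HPA)
    n = int(round((hi - lo) / step))
    candidates = [lo + i * step for i in range(n + 1)]
    return min(candidates, key=expected_squared_error)


if __name__ == "__main__":
    mean = sum(p * o for o, p in zip(OUTCOMES_HPA, PROBS))
    print(f"probability-weighted mean: {mean:.1f} hPa")
    print(f"MSE-optimal forecast:      {best_forecast():.1f} hPa")
    for f in (980.0, mean, 1004.0):
        print(f"forecast {f:.1f} hPa -> expected squared error {expected_squared_error(f):.2f}")
```

In this toy case, forecasting the bomb (980 hPa) has an expected squared error of 403.2 versus 120.96 for the hedged 996.8 hPa, so a squared-error objective nudges the forecast toward the middle even when a deep low is a real possibility.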

So, typically with these models, you'll want to use them like you're using an ensemble mean. Temps will tend slightly toward seasonal averages, MSLP will tend toward non-extreme lows/highs, etc. The real benefit of these models is the spatial depiction of synoptic-scale features: cyclone tracks, large-scale setups, etc.
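The "use them like an ensemble mean" advice can be illustrated the same way. This hypothetical Python sketch assumes Gaussian-shaped lows along a 1-D cross-section: five members each deepen a low to 988 hPa, but their centers are spread 80 km apart. Averaging them point by point keeps the center where the members agree (the track), while the averaged low fills in to roughly 997 hPa. All numbers and the Gaussian shape are assumptions for illustration only.

```python
"""Toy illustration (hypothetical numbers): averaging ensemble members with
slightly displaced lows preserves the track but fills in the low."""
import math

XS_KM = list(range(-600, 601, 10))  # hypothetical 1-D cross-section, km
BACKGROUND_HPA = 1012.0
DEPTH_HPA = 24.0   # every member deepens its low to 1012 - 24 = 988 hPa
WIDTH_KM = 150.0   # assumed e-folding half-width of each low


def member_profile(center_km: float) -> list[float]:
    """Sea-level pressure along XS_KM for one member, modeled as a Gaussian dip."""
    return [
        BACKGROUND_HPA - DEPTH_HPA * math.exp(-(((x - center_km) / WIDTH_KM) ** 2))
        for x in XS_KM
    ]


# Five members that agree on the storm but differ slightly on where the low sits.
CENTERS_KM = [-160, -80, 0, 80, 160]
members = [member_profile(c) for c in CENTERS_KM]

# Point-by-point ensemble mean across members.
mean_profile = [sum(vals) / len(vals) for vals in zip(*members)]

deepest_member = min(min(m) for m in members)
mean_min = min(mean_profile)
mean_min_location = XS_KM[mean_profile.index(mean_min)]

if __name__ == "__main__":
    print(f"deepest member low: {deepest_member:.1f} hPa")
    print(f"ensemble-mean low:  {mean_min:.1f} hPa at x = {mean_min_location} km")
```

The mean still puts the low at x = 0 km, the consensus position, but no single member is as weak as the averaged low, which is the same "right track, muted intensity" behavior described above.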

With all of that being said, these models still score better than GFS in testing. GraphCast, AIFS, and Aurora score better than the ECMWF op and very close to the EPS (sometimes exceeding it). Pangu scores on par with the ECMWF op. FourCastNet generally scores worse than the ECMWF op (but better than GFS). These models are new, and it can be overwhelming to see so many different models, but they are certainly the future of meteorology. They are incredibly cheap to run compared to physics-based NWP models, and they keep improving at a rapid pace.

I would love to put together a guide for using these new models at some point. I build models of this genre for a private company, so I have been fully immersed in this emerging field for a few years.

Thanks very much for the detail and sharing your expertise here. This is all pretty brand new to most of us.

Edit: Would it be fair to say, based on your comments, that a big bomb would be less likely to be depicted on the model? And are you also saying that temps may run a bit warm in a situation like the one we have coming up, as the models try to revert back to the averages, so to speak? @bouncycorn

accu35

Member

Can’t wait for all the short range models to start showing. Those will be telling.

Thank you so much for the breakdown, this is incredibly helpful!

What's the state of short-range/mesoscale AI guidance? Do many of these AI models have short-range spinoffs? I'm sure it's being explored, but is it as robust as these "globals?"

Yes, a big anomalous bomb COULD be depicted on the model, but it would be less likely to be. This is especially true for transformer-based architectures (FourCastNet, Pangu, Aurora) and less so for graph-based architectures (AIFS, GraphCast). We saw this during hurricane season: the models did magnificently on cyclone tracks (better than any physics models) but consistently underestimated the intensity of the cyclones. You could see something where the orientation of the cyclone appears bomb-like (or even shows some intensification), but the actual intensification is vastly underestimated.

Stormlover

Member

Northeast Alabama WAFF First Alert Weather

**UPDATE** Next Friday storm setup. The overall setup continues to come together, but we are still several days out. Long-range models continue to show a surface low in the northern Gulf of Mexico late Thursday night into Friday morning. The current forecast track is one that has a history of producing significant snowfall for North Alabama. The exact track of this surface low pressure system will determine who gets snow, freezing rain/sleet, or just rain. If the low tracks farther north, the chance for wintry weather over North Alabama will decrease significantly. One thing to keep in mind is that we will have nearly a week of freezing temperatures in advance of any moisture that falls, so if we end up with freezing rain and sleet, we could see travel issues develop quickly early Friday. Don't cancel any plans yet; just keep checking back and we will keep you updated. #alwx #snow


Thank you. Does what I said about temps hold water as well?

Probably less of an issue with temps. Temps in the range we are expecting (20-40F) aren't really rare in the model's training set.

I've been out and haven't seen any models. One-sentence summary? Seems like we held serve?

The only thing I would say is that if this storm doesn't work out, we can't just credit the Euro/EPS either (at least not at this point). The Euro/EPS was originally super suppressed/no storm (that was when GSP NWS put out the AFD with "At this point, the chance for snow east of the mountains is close to zero" and "All in all, the extended is dry, cold, and snow-less"; that was on Friday afternoon).

The Euro/EPS flipped from suppressed/no storm all the way to fully incorporating the Baja wave and amping it up into a sizeable storm, but with most solutions too warm, and is now going back to more suppressed (a weaker storm).

Links to the posts on this...

iGRXY

Member

Here's what I will say about this: we have gone from amped and warm to less phasing and suppression. We still have 5 days to go, so this is nowhere near resolved. If I had to guess, we probably meet somewhere in the middle.

rburrel2

Member

12z models moved toward weaker/slider solutions (more leaving the cutoff behind and less northern-stream digging), to the point of precip concerns for everyone east of the mountains, with 2-4 inches of snow maybe being the best-case scenario for whoever "jackpots." Lots more complete whiffs/no-storm solutions on individual ensemble members.

Side note: the AI models trended the opposite direction, but also fairly cold (we hug them for now).

Here is what SV shows for snow on the 12z Euro AI run

This storm starts before hr 120 now and is completely gone by hr 135 (except the Canadian op). The jury's decision is coming down over the next cycle or two. Saddle up.

Blue_Ridge_Escarpment

Member

Pops

Member

Yep!


NWS disagrees:

> Hopefully guidance continues to converge on at least low track so that portion of the forecast can get reasonably cleared up but specifics about precip type, timing, etc. will all take several days to even have a decent idea.

Keep in mind that this is the initial shortwave that drops into the Baja wave and prevents it from staying... in Baja. But all eyes down the road are on the subsequent wave/trough that drops into the Plains and how it interacts with the Baja wave that has kicked east.

Blue_Ridge_Escarpment

Member

Yes, but if the Baja wave ejects, we have two options to score. If it's held back, we're solely relying on the NS, which I’m extremely leery of.
